Let me tell you about one of the worst bugs I’ve produced (so far) in my career as a software engineer.

I remember it as if it were yesterday. Back in 2012, I was working for a company that runs an online marketplace for real estate. Many people rely on its services, for instance, to find an apartment.

Search Engine Optimization

I worked in the SEO team. SEO stands for Search Engine Optimization. It is all about making sure that the online marketplace shows up first when you google terms like “flat” or “apartment.”

The competition in this market was fierce. Two other noteworthy players wanted the pole position more than anything else in the world.

The thing with Google is this: the first search result gets most of the relevant traffic. And traffic is the bread and butter of online marketplaces like this one. There are no brick-and-mortar stores; everything happens online. And Google is where the customer journey starts.

Since SEO is all about making your website look relevant, you need good unique content on your pages – and you need a lot of it.

For common words like “apartment,” it takes many years to reach the first page of Google’s search results, primarily because so many companies compete to be found when users search for “apartment.”

The Googlebot Always Rings Twice

Twice a day, the website got a unique visitor – the web crawler bot from Google.

The Googlebot is a program that surfs the internet all day long. Its job is to follow every link and look at every page on the web. It scans each page’s content and adds the page to the Google Search Index, along with some meta information.

When you google a specific term, let’s say “apartment,” the Google Search Engine looks in its index for web pages associated with this term. It then sorts the pages it found by relevance and presents the result to you, with the most relevant page at the top of the list.

In short, the Googlebot crawls the internet for new content and populates the Google Search Index. And the Google Search Engine then uses the index to quickly find relevant pages based on the user’s search term.
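To make this split between crawling, indexing, and searching a bit more concrete, here is a toy inverted index in TypeScript. It is purely illustrative: the class `SearchIndex`, its methods `addPage` and `search`, the example URLs, and the naive “count the occurrences” ranking are all assumptions of this sketch and have nothing to do with how Google actually ranks pages.

```typescript
// Toy inverted index: maps each term to the pages containing it,
// together with how often the term occurs on each page.
// Hypothetical illustration only; real search engines rank very differently.
class SearchIndex {
  private readonly postings = new Map<string, Map<string, number>>();

  // The "crawler" side: tokenize a page and record its terms in the index.
  addPage(url: string, text: string): void {
    for (const term of tokenize(text)) {
      const pages = this.postings.get(term) ?? new Map<string, number>();
      pages.set(url, (pages.get(url) ?? 0) + 1);
      this.postings.set(term, pages);
    }
  }

  // The "search engine" side: look the term up and sort by a naive relevance score.
  search(query: string): string[] {
    const pages = this.postings.get(query.toLowerCase());
    if (!pages) return [];
    return Array.from(pages.entries())
      .sort((a, b) => b[1] - a[1]) // more occurrences first
      .map(([url]) => url);
  }
}

// Lowercase the text and split it into alphabetic words.
function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z]+/g) ?? [];
}

const index = new SearchIndex();
index.addPage("https://example.com/a", "Apartment for rent. Bright apartment, two rooms.");
index.addPage("https://example.com/b", "House for sale with a garden.");
index.addPage("https://example.com/c", "Small apartment near the station.");

const results = index.search("apartment"); // ["https://example.com/a", "https://example.com/c"]
console.log(results);
```

Page “a” mentions “apartment” twice, so this naive score puts it first. The important part is the shape: the expensive crawling happens ahead of time, and a search is just a quick lookup in a prepared structure.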

In our case, the Googlebot came twice a day, first at 2 am and then again at 2 pm. We could see that in our monitoring tools.

You shall not pass!

Sometimes, we don’t want the Googlebot to put our page into the Google Search Index. Website owners have different reasons for that. For instance, some don’t want to be found, and others might have pages that are not ready yet.

In these cases, we can “ask” the Googlebot to not put our page into the index. We can achieve that with a special meta tag in the web page head:

```html
<html>
  <head>
    <meta name="robots" content="noindex, nofollow">
    <!-- ... -->
  </head>
  <body>
    <!-- ... -->
  </body>
</html>
```

noindex means “Please don’t put this page into your index,” and nofollow means “Please don’t follow any links on this page.”

With noindex, you can basically make your page invisible to search, and with nofollow, you can turn it into a dead end for the web crawler.
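From the crawler’s point of view, the two directives boil down to two separate yes/no decisions. The following TypeScript sketch is a simplified, hypothetical illustration: the function `parseRobotsDirectives` and its return shape are made up for this example, and it ignores everything else a real crawler takes into account (robots.txt, the X-Robots-Tag HTTP header, bot-specific tags). It assumes the directives are case-insensitive and separated by commas and/or spaces, and it also handles `none`, which Google documents as shorthand for `noindex, nofollow`.

```typescript
// Hypothetical sketch: how a crawler *might* interpret the robots meta tag.
interface RobotsDecision {
  index: boolean;  // may the page be added to the search index?
  follow: boolean; // may the crawler follow the links on the page?
}

function parseRobotsDirectives(content: string | null): RobotsDecision {
  // Without a robots meta tag, the default is to index and follow.
  const decision: RobotsDecision = { index: true, follow: true };
  if (content === null) return decision;

  // Case-insensitive; accept commas and/or whitespace as separators.
  const directives = content.toLowerCase().split(/[\s,]+/).filter(Boolean);
  for (const d of directives) {
    if (d === "noindex" || d === "none") decision.index = false;
    if (d === "nofollow" || d === "none") decision.follow = false;
  }
  return decision;
}

console.log(parseRobotsDirectives("noindex, nofollow")); // index: false, follow: false
console.log(parseRobotsDirectives("index, follow"));     // index: true, follow: true
console.log(parseRobotsDirectives(null));                // index: true, follow: true
```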

At that time, Google’s motto was “Don’t be evil.” So there was really no reason to believe that the Googlebot would not respect our privacy. 😉

Test & Production

Most software companies have a test system (a.k.a. environment) to try out new features and changes. The test system, TEST, is more or less a replica of the production system, PROD, with all its servers, databases, and so on.

A common way to reach the TEST environment as a developer is to visit test.company.com rather than company.com.

When developers are happy with what they see on TEST, they copy the changes to PROD. This is the moment regular users finally see the changes, too.

The TEST environment is, as far as the Googlebot is concerned, a website like any other website. And because we don’t want to leak unfinished features or upcoming seasonal promotions to end-users, we need to tell the Googlebot to ignore the TEST environment:

```jsx
<head>
  {/* hostname: the host this page is served from, e.g. test.company.com */}
  {hostname.includes("test.") ? (
    <meta name="robots" content="noindex, nofollow" />
  ) : (
    <meta name="robots" content="index, follow" />
  )}
  {/* ... */}
</head>
```

It takes years to build a reputation…

One day, my team and I were busy working on some new features when the Product Owner came in. He asked if we could take one particular page off the index because it didn’t really help our Google ranking.

So I did. I pulled up the file with the source code for the page and removed the if/else statement, because the TEST page shouldn’t be indexed and neither should the PROD page:

```jsx
<head>
  <meta name="robots" content="noindex, nofollow" />
  {/* ... */}
</head>
```

At around 1 pm, my team went for lunch.

I just sat down, ready to bite into my well-earned kebab, when my phone rang.

It was the Product Owner:

“Did you tell the Googlebot to take our site off the Google index?”

Me: “Yes, that’s what you wanted.”

Him: “BUT NOT THE ENTIRE WEBSITE!!!”

Me: “What do you mean?”

Him: “All pages of the marketplace are currently set to NOINDEX! Not just the one page I asked you to.”

Uh oh.

We dropped our food, grabbed our stuff, and ran back to the office.

If what the Product Owner had just said was true, then we had less than an hour to fix the issue. Less than an hour before the Googlebot would come.

Once the Googlebot saw noindex, Google would drop the whole marketplace from its Search Index. It would take years to get back to the top of the search results. Our competitors would get all the traffic – and the sales.

I started to wonder whether the competition would send me a thank-you card. And would the card actually find its way to Antarctica…

It’s a Team Effort

We found and fixed the problem in time. I had changed the wrong file, and we managed to release the previous version of the file with the correct instruction for the Googlebot.

The bot came and went as if nothing happened. The marketplace stayed at position one of the search results. Everything was good.

In our biweekly Retrospective ceremony, we discussed the issue and how to prevent these kinds of incidents in the future.

We settled on two action items:

1) Automated Tests

We added a missing test to our safety net: a test that ran automatically every time we changed the production environment and would alert us should the robots meta tag on PROD ever signal noindex again.

2) 4-eyes Principle

We decided that every change to our software had to be reviewed by a second pair of eyes – four eyes see more than two. We found Git Pull Requests to be super inefficient and settled on in-person code reviews.
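I no longer have the original test, so the following is only a sketch of what the first action item, the automated robots check, could look like in TypeScript. It assumes a runtime with a global `fetch` (e.g. Node 18+), a placeholder PROD URL, and a hypothetical `sendAlert` hook. Extracting the tag with a regular expression is a deliberate simplification (it expects `name` before `content`); a real check would rather use an HTML parser.

```typescript
// Hypothetical post-deployment check: alert if PROD ever signals "noindex".
// Assumptions: global fetch (Node 18+), a placeholder URL, and a stub alert hook.

// Extract the content of <meta name="robots" content="...">, if present.
// Simplification: a regex instead of a real HTML parser.
function robotsMetaContent(html: string): string | null {
  const match = html.match(/<meta\s+name=["']robots["']\s+content=["']([^"']*)["']/i);
  return match ? match[1] : null;
}

// True if the page asks search engines not to index it.
function signalsNoindex(html: string): boolean {
  const content = robotsMetaContent(html);
  if (content === null) return false; // no tag: indexing is allowed by default
  return content
    .toLowerCase()
    .split(/[\s,]+/)
    .some((d) => d === "noindex" || d === "none");
}

// Hypothetical alert hook; in practice this might page the team or post to chat.
function sendAlert(message: string): void {
  console.error(`ALERT: ${message}`);
}

// Fetch each production page and alert if any of them is set to noindex.
async function checkProduction(urls: string[]): Promise<void> {
  for (const url of urls) {
    const response = await fetch(url);
    const html = await response.text();
    if (signalsNoindex(html)) {
      sendAlert(`${url} is set to noindex!`);
    }
  }
}

// Example run after a deployment (the URL is a placeholder):
// checkProduction(["https://company.com/"]);
```

Checking several representative URLs rather than just the home page matters here: my bug would only have been caught if the test looked at pages that were supposed to stay indexed.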

The Automated Tests and the Peer Reviews formed a two-layer safety net, and this redundancy was super helpful in the following months and years. Not only did we catch tons of bugs before they hit the customers, but our code quality also improved considerably, and we learned a great deal from each other.

Coding was never more fun.

What if?

Let’s assume for a moment that we hadn’t managed to fix the problem in time.

What would be the consequences of introducing a bug that caused an outage, or some loss of data, or losing users to the competition?

Would the company fire us? Would they sue us? Would we have to pay for the loss of revenue until the end of our lives?

Most likely not.

Unless we do something evil deliberately (and get caught), it’s really difficult to prove that it was our fault alone. Software engineering is complicated, and usually, there is more than one person involved. Programmers, admins, product owners, …

It’s a team effort, and our daily work is also influenced by what others did in the past. For instance, the filenames someone chose a couple of years ago, or the tests they didn’t write.

In the end, it always boils down to the boss not having put an appropriate process in place to prevent these kinds of incidents.

That doesn’t mean, of course, that we can do whatever we want and walk around carelessly. No, we still have to give our best and pay attention to details.

But we also can’t let ourselves be paralyzed by the fear of breaking things. We have to have the courage to change things. Breaking things is normal; we just need to make sure that we learn from it.

Modern Agile software engineering practices such as Writing Tests, Deployment Automation, or Retrospective Ceremonies are all there for a reason. And they shouldn’t be skipped or altered on a whim. They create Redundancy, increase Quality, and make room for the Opportunity to learn from failures.

And when a team chooses good development practices, failing becomes a safe thing to do.

See you around,

-David

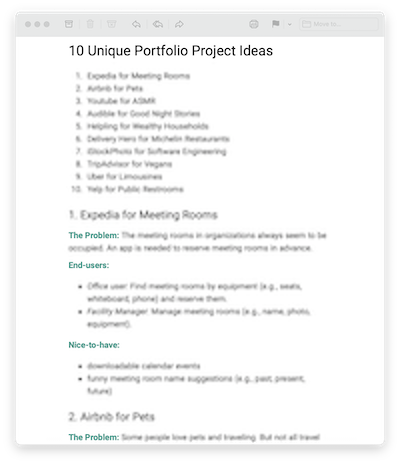

Does your project portfolio look like all the others?

Sign-up for my newsletter and get…

10 Unique Portfolio Project Ideas

Stand out from the crowd.

I’ll keep you posted with a few emails per month. You can, of course, opt out at any time.